Apache Flink Example With Java In Flink Cluster

Posted By : Rahbar Ali | 29-Sep-2018

Apache Flink is an open-source framework and distributed processing engine for stateful computations over limited and unlimited data.

1- Limited data: data that has both a start point and an end point is called limited or bounded data.

2- Unlimited data: data that has a start point but no end point is called unlimited or unbounded data.

For example, suppose we stand by a road and count the vehicles passing a given point. There is no limit to the number of vehicles that may pass, so this type of data is called unbounded data.
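The difference can be sketched in plain Java, without Flink. This is only an illustration of the two kinds of data: the class name, the method names and the `limit` cut-off are hypothetical and are not part of Flink.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Stream;

public class BoundedVsUnbounded {

    // Bounded: the whole data set is known up front, so a final count exists.
    public static long countBounded(List<String> vehicles) {
        return vehicles.stream().count();
    }

    // Unbounded: the source never ends, so only a running count over the
    // elements seen so far is possible. `limit` is a hypothetical cut-off
    // that lets this demo terminate.
    public static long countSeenSoFar(Stream<String> endlessVehicles, long limit) {
        return endlessVehicles.limit(limit).count();
    }

    public static void main(String[] args) {
        // A recorded list of vehicles has a start and an end.
        List<String> recorded = Arrays.asList("car", "bus", "truck");
        System.out.println("bounded count = " + countBounded(recorded));

        // Vehicles on the road keep coming: an infinite stream.
        Stream<String> road = Stream.generate(() -> "car");
        System.out.println("seen so far = " + countSeenSoFar(road, 5));
    }
}
```

A stream processor such as Flink handles the second case by producing results continuously instead of waiting for the input to end.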

Apache Flink processes both types of data at in-memory speed.

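You can download Apache Flink from:

http://mirrors.fibergrid.in/apache/flink/flink-1.6.1/flink-1.6.1-src.tgz

Note that this URL points to the source archive (-src.tgz), which has to be built before it can be run. A prebuilt binary release from the Apache Flink downloads page avoids that step.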

After downloading, extract the archive and go to this location:

/Downloads/

Now start the cluster with this command:

./start-cluster.sh

now open

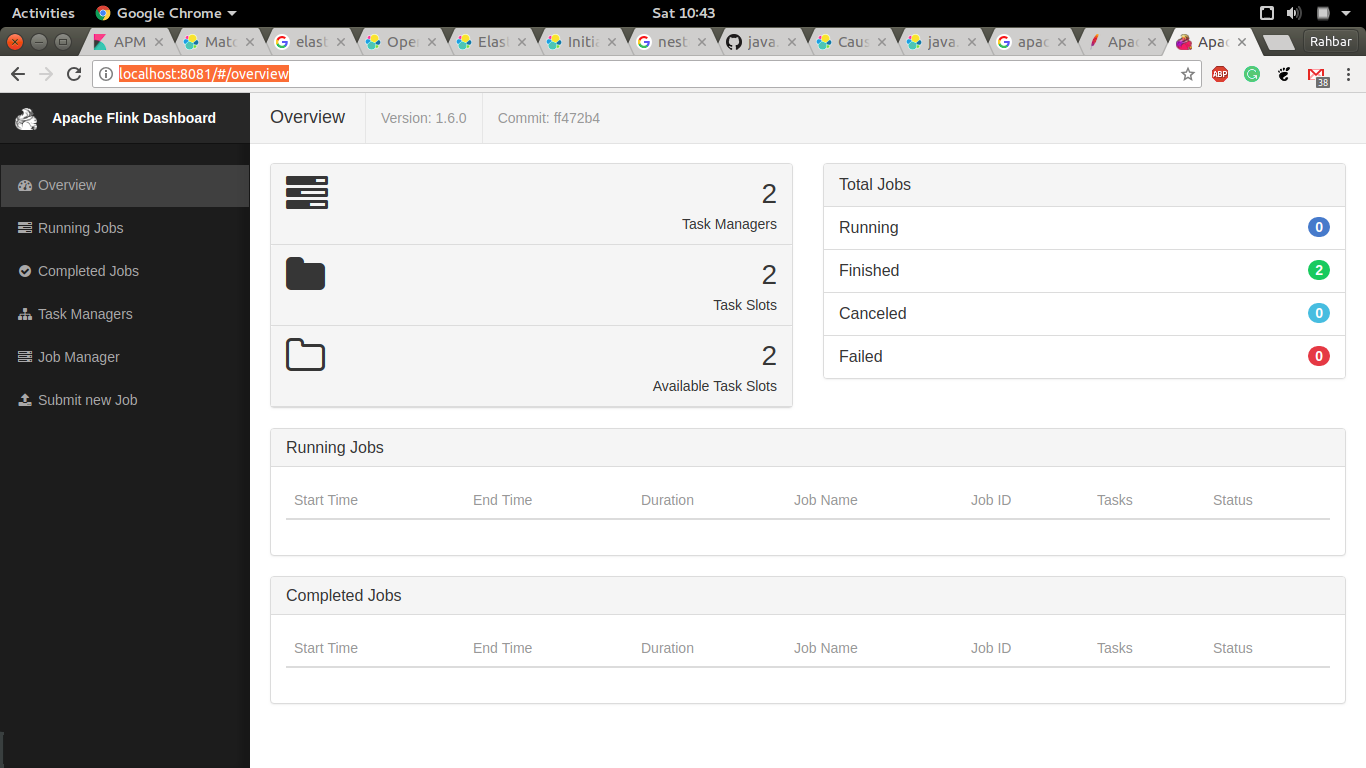

http://localhost:8081/#/overview

you will

Now we are going to create a sample project that uses the Apache Flink framework to process data from a file and print the result. Generate the project with the Flink quickstart Maven archetype:

    mvn archetype:generate \
      -DarchetypeGroupId=org.apache.flink \
      -DarchetypeArtifactId=flink-quickstart-java \
      -DarchetypeVersion=1.6.1

After creating the project, open the StreamingJob class and replace its contents with this code:

    package com;

    import org.apache.flink.api.java.DataSet;
    import org.apache.flink.api.java.ExecutionEnvironment;
    import org.apache.flink.api.java.aggregation.Aggregations;
    import org.apache.flink.api.java.tuple.Tuple2;

    public class StreamingJob {

        public static void main(String[] args) throws Exception {
            // set up the batch execution environment
            final ExecutionEnvironment env = ExecutionEnvironment.getExecutionEnvironment();

            // read the input file line by line
            DataSet<String> text = env.readTextFile("/home/rahbar/Desktop/flink.txt");

            // split lines into (word, 1) pairs, group by word (field 0), sum the counts (field 1)
            DataSet<Tuple2<String, Integer>> counts = text.flatMap(new LineSplitter())
                    .groupBy(0)
                    .aggregate(Aggregations.SUM, 1);

            // print() triggers execution of the DataSet program by itself;
            // calling env.execute() after it fails because no new sink is defined
            counts.print();
        }
    }

Now create a class named LineSplitter in the same package and add this code:

    package com;

    import org.apache.flink.api.common.functions.FlatMapFunction;
    import org.apache.flink.api.java.tuple.Tuple2;
    import org.apache.flink.util.Collector;

    public class LineSplitter implements FlatMapFunction<String, Tuple2<String, Integer>> {

        private static final long serialVersionUID = 1L;

        @Override
        public void flatMap(String value, Collector<Tuple2<String, Integer>> out) {
            // normalize and split the line into words
            String[] tokens = value.toLowerCase().split("\\W+");

            // emit the pairs
            for (String token : tokens) {
                if (token.length() > 0) {
                    out.collect(new Tuple2<String, Integer>(token, 1));
                }
            }
        }
    }
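To check what the job computes before submitting it to the cluster, the same logic can be sketched in plain Java with no Flink dependency. This is only an approximation for local experiments: the class PlainWordCount and its countWords method are hypothetical names, and a map stands in for Flink's groupBy and SUM aggregation.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class PlainWordCount {

    // Applies the same normalization as LineSplitter (lower-case, split on
    // non-word characters) and sums a count of 1 per word.
    public static Map<String, Integer> countWords(List<String> lines) {
        Map<String, Integer> counts = new TreeMap<>(); // sorted keys for readable output
        for (String line : lines) {
            for (String token : line.toLowerCase().split("\\W+")) {
                // split() yields an empty first token when the line starts
                // with a non-word character, hence the length check
                if (token.length() > 0) {
                    counts.merge(token, 1, Integer::sum);
                }
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("Apache Flink, apache flink!", " BTC amount");
        // print in the same (word,count) shape that Flink's Tuple2 uses
        countWords(lines).forEach((word, n) -> System.out.println("(" + word + "," + n + ")"));
    }
}
```

The second sample line starts with a space on purpose, to show why the empty-token check in LineSplitter is needed.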

Now build the project with this command:

mvn clean package

After a successful build, there will be a JAR file in the target folder of the project.

Now go to the bin directory of Flink:

/Downloads/flink-1.6.0/bin$

and run the project with this command:

./flink run /home/rahbar/Documents/forblog/1.0/target/1.0-1.0.jar

The job counts how many times each word occurs in the file and prints a result like this:

(accesses,1)

(allwindowfunction,1)

(amount,22)

(apache,1)

(api,1)

(attempt,1)

(bean,1)

(beancreationexception,1)

(beans,1)

(btc,8)

(cancelling,1)

(class,3)

(common,3)

Thanks

If you have any questions about this blog, leave a comment.


About Author

Rahbar Ali

Rahbar Ali is a bright Java developer who is keen to learn new skills.